Machine Learning in Python: Main developments and technology trends in data science, machine learning, and artificial intelligence – arXiv Vanity

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

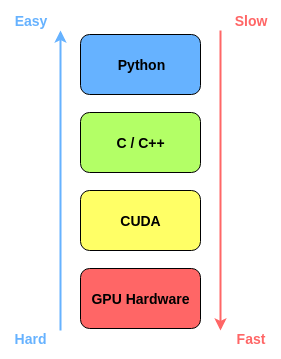
